Performance Computing

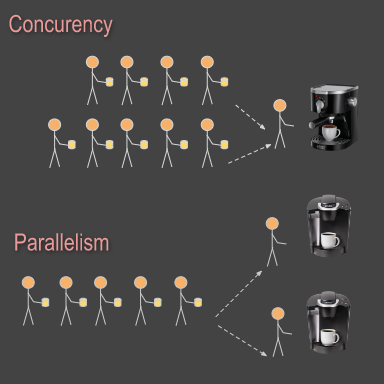

Concurrency vs parallel execution

There are cases when you can use the same subprogram to process a lot of data in parallel. These are data-oriented applications, well suited to parallelism. In other cases you have many relatively independent subprograms that can be executed concurrently or asynchronously, which prevents your application from blocking while it waits for resources. Both methods can improve the performance and responsiveness of your application.

Efficiency vs Performance

Efficiency is not the same thing as performance. You can improve efficiency by using better data structures and algorithms, and improving efficiency this way also improves performance. You can also improve performance by using more processing power, but this is not necessarily efficient, since you consume more power.

Multi-threading can waste significant resources. You should use single-threaded applications most of the time. Multi-threaded applications are difficult to build, hard to maintain, and most of the time less efficient than single-threaded applications.

Amdahl’s Law

The theoretical performance gain can be calculated with a rule known as Amdahl’s Law. This law establishes that there is always a maximum speedup that can be achieved when the execution of a program is split into parallel threads.

Amdahl's Law

$$S(n) = \frac{1}{(1 - P) + \frac{P}{n}}$$

- S(n) is the theoretical speedup achieved by using n cores or threads.

- P is the fraction of the program that can be parallelized.

- (1-P) is the fraction of the program that must be serial.
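As a rough illustration of the formula, the sketch below evaluates the speedup for a few core counts. The class name `AmdahlDemo` and the value P = 0.9 are assumptions chosen for the example, not figures from a real program.

```java
// Illustrative helper (hypothetical name): evaluates Amdahl's Law.
class AmdahlDemo {

    // p = fraction of the program that can be parallelized (0..1),
    // n = number of cores or threads.
    static double speedup(double p, int n) {
        return 1.0 / ((1.0 - p) + p / n);
    }

    public static void main(String[] args) {
        double p = 0.9; // assumed: 90% of the program can run in parallel
        for (int n : new int[] {1, 2, 4, 8, 1024}) {
            System.out.printf("n=%d -> speedup %.2f%n", n, speedup(p, n));
        }
        // As n grows, the speedup approaches 1 / (1 - p), which is 10 here.
    }
}
```

With P = 0.9, four cores give a speedup of about 3.08, and no number of cores can push it past 10, because the serial 10% always remains.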

We study parallel processing in the Software Engineering course. If you are interested in the details, you can study Bash scripting. In this article we will focus on multi-threading.

Race condition

Race conditions must be avoided. A race condition occurs when several threads read and modify shared data at the same time without coordination, so the outcome depends on timing. If race conditions are not prevented, they produce non-deterministic or incorrect results.

Preventing race conditions with locks is possible, but locks can introduce another error called "deadlock", where threads wait on each other forever. Avoiding deadlock takes careful design, for example with higher-level synchronization tools such as "semaphores", and the problem is complex.

Java provides frameworks, classes, and interfaces to deal with these problems and make Java code thread-safe. You must learn which of them to use for the different use cases in your application.
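To make the problem concrete, here is a minimal sketch of a race on a shared counter and one possible fix. The class and method names are hypothetical. `AtomicInteger` is only one of several thread-safe tools in the JDK and is used here because it is the shortest to show.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CounterDemo {

    // Runs `threads` threads that each call increment.run() `perThread` times.
    static void runInThreads(int threads, int perThread, Runnable increment) {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    increment.run();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join(); // wait for every worker before reading the result
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    // Unsafe: counter[0]++ is a read-modify-write sequence, so updates can be lost.
    static int unsafeCount(int threads, int perThread) {
        int[] counter = {0};
        runInThreads(threads, perThread, () -> counter[0]++);
        return counter[0];
    }

    // Safe: incrementAndGet() performs the update atomically.
    static int safeCount(int threads, int perThread) {
        AtomicInteger counter = new AtomicInteger();
        runInThreads(threads, perThread, counter::incrementAndGet);
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("unsafe: " + unsafeCount(4, 100_000)); // may be less than 400000
        System.out.println("safe:   " + safeCount(4, 100_000));
    }
}
```

The unsafe version may happen to print the right total on some runs, which is exactly what makes race conditions hard to find.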

Level of parallelism

- Multi-processing: Based on multiple processors or multi-core CPUs. Each process is executed on a different core. Memory is not shared between processes.

- Multi-tasking: Multiple tasks running concurrently on a single CPU. The OS executes these tasks by switching between them very frequently. A task can be a thread or a process.

- Multi-threading: You can divide the same program into multiple parts called threads and run those concurrently or in parallel. Threads share the same memory space.

Processes vs Threads

Let's distinguish between the two terms. Understanding the definitions will improve our ability to choose between methods of parallelization:

Process

A process is a job that executes a program. A process has its own address space, a call stack, and handles to resources such as open files or network connections. A computer system normally has multiple processes running at a time. The OS keeps track of all these processes and facilitates their execution by sharing the processing time of the CPU among them.

Thread

A thread is a logical path of execution within a process. Every process has at least one thread, called the main thread, which can create additional threads. All threads created by a process share the same resources, including memory and open files. Every thread has its own call stack, created at runtime.

Multi-Process

One strategy for parallelization is to split the data and create multiple processes that run in parallel, using multiple Java instances. This strategy works well with system languages like C, Fortran, Rust, or Nim, but not with Java. The JVM needs time to start, and it occupies a large amount of memory when it is loaded multiple times.

Parallel System

From my experience

I have worked with a code base for Nokia that used Apache Ant to run parallel processes that created digital maps for different regions. This solution caused server overload and low overall efficiency. The server load was balanced with a job scheduler, but the process was difficult to optimize and control.

Resource depletion

When you combine Java multi-threading with process parallelization, the result can be disastrous. Instead of gaining performance you can jam the server. Choking a server frustrates the entire team and can eventually have catastrophic consequences.

Benefits of Multi-processing

- Higher throughput;

- Code is relatively simple;

- Easy to debug using a single thread;

- You can switch from a single process to multiple processes;

Problems of Multi-processing

- Takes more memory to run;

- Inter-process communication is difficult;

- Can lead to resource depletion;

- You may need many database licenses;

Multi-threading

Multi-threading is realized within a single process. Before diving into examples, let's review the advantages and disadvantages of this strategy. You may not need to learn all of this if you don't actually have critical bottlenecks in your process.

Benefits of Multi-threading

- Higher throughput;

- More responsive applications;

- More efficient utilization of resources;

- You can use a single database license;

Problems of Multi-threading

- More difficult to find bugs;

- Higher cost of code maintenance;

- More demand on the system;

Java Threads

There are two options for creating a thread in Java.

- Option 1: Create a class that extends the Thread class and overrides its run() method.

- Option 2: Create a Runnable object and pass it to a Thread.
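The two options above can be sketched as follows. The class names, thread names, and the shared `LOG` list are illustrative assumptions. The list only exists so the example has something observable to print.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class ThreadOptionsDemo {

    // Thread-safe list so both threads can record their names.
    static final List<String> LOG = new CopyOnWriteArrayList<>();

    // Option 1: extend Thread and override run().
    static class Worker extends Thread {
        Worker(String name) {
            super(name);
        }

        @Override
        public void run() {
            LOG.add(getName());
        }
    }

    static List<String> runBoth() {
        LOG.clear();
        Thread first = new Worker("worker-1");
        // Option 2: pass a Runnable (here a lambda) to a Thread.
        Thread second = new Thread(
                () -> LOG.add(Thread.currentThread().getName()), "worker-2");
        first.start();
        second.start();
        try {
            first.join();
            second.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return LOG;
    }

    public static void main(String[] args) {
        System.out.println(runBoth()); // the order of the two names may vary
    }
}
```

Option 2 is usually the more flexible choice, because the task is not tied to the Thread class and can later be handed to a thread pool unchanged.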

Note: Each thread created in Java 8 reserves about 1 MB of stack memory by default on a 64-bit OS. You can check this on the command line: java -XX:+PrintFlagsFinal -version | grep ThreadStackSize.

Executor Framework

To create and manage threads in Java you can use the Executor framework. The Java Concurrency API defines three executor interfaces that cover everything needed for creating and managing threads:

- Executor: launch a task specified by a Runnable object.

- ExecutorService: a sub-interface of Executor that adds functionality to manage the life-cycle of the tasks.

- ScheduledExecutorService: a sub-interface of ExecutorService that adds functionality to schedule the execution of the tasks.
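As a minimal sketch of the middle interface, the example below submits a few tasks to an ExecutorService and collects their results. The class name, the pool size of 3, and the squaring task are arbitrary choices for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ExecutorDemo {

    // Squares each input on a fixed pool of 3 threads.
    static List<Integer> squares(List<Integer> inputs) {
        ExecutorService pool = Executors.newFixedThreadPool(3);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int value : inputs) {
                Callable<Integer> task = () -> value * value;
                futures.add(pool.submit(task));
            }
            List<Integer> results = new ArrayList<>();
            for (Future<Integer> future : futures) {
                results.add(future.get()); // blocks until that task is done
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // life-cycle management: stop accepting new tasks
        }
    }

    public static void main(String[] args) {
        System.out.println(squares(Arrays.asList(1, 2, 3, 4))); // [1, 4, 9, 16]
    }
}
```

The results come back in submission order because the futures are read in the order they were created, regardless of which task finished first.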

Executor Service

Oracle references:

Asynchronous execution

Synchronous vs Asynchronous

Asynchronous programming in Java can be done with the CompletableFuture class. It implements two interfaces: Future and CompletionStage. It provides a large set of convenience methods for creating, chaining, and combining multiple futures.
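A short sketch of creating, combining, and chaining futures might look like this. The `slowPrice` and `slowTax` methods are hypothetical stand-ins for slow resources, and the sleeps only simulate latency.

```java
import java.util.concurrent.CompletableFuture;

class AsyncDemo {

    // Hypothetical slow lookups; the sleep stands in for I/O latency.
    static int slowPrice() {
        sleep(100);
        return 40;
    }

    static int slowTax() {
        sleep(100);
        return 2;
    }

    static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    static CompletableFuture<String> totalAsync() {
        // Creating: both lookups start immediately on background threads.
        CompletableFuture<Integer> price = CompletableFuture.supplyAsync(AsyncDemo::slowPrice);
        CompletableFuture<Integer> tax = CompletableFuture.supplyAsync(AsyncDemo::slowTax);
        return price
                .thenCombine(tax, Integer::sum)     // combining two futures
                .thenApply(sum -> "total=" + sum);  // chaining a transformation
    }

    public static void main(String[] args) {
        CompletableFuture<String> total = totalAsync();
        System.out.println("main thread is free to do other work");
        System.out.println(total.join()); // total=42
    }
}
```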

Use Cases

Asynchronous execution is suitable for applications that request slow resources. When reading a resource is slow, a normal (synchronous) application freezes while it waits for the resource to be read. This makes the application "unresponsive".

With asynchronous execution, the main application can do other things and receive a notification when the resource has been read. It then takes the result and uses it, for example to render a page or to make a computation and display the final result.

Synchronize

When multiple tasks do not depend on each other, asynchronous execution can run them in parallel threads. The main application can then finish before the threads end, which is usually not what you want. A better way is to suspend the main application and wait for the threads to finish before exiting.
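One way to wait for all tasks, sketched here with `CompletableFuture.allOf`, is shown below. The class name and the trivial task body are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentLinkedQueue;

class WaitForAllDemo {

    // Starts `count` independent tasks and returns how many had finished
    // by the time this method returns.
    static int runAndWait(int count) {
        ConcurrentLinkedQueue<Integer> done = new ConcurrentLinkedQueue<>();
        List<CompletableFuture<Void>> tasks = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            int id = i;
            tasks.add(CompletableFuture.runAsync(() -> done.add(id)));
        }
        // allOf completes only when every task has completed;
        // join() suspends the caller until then.
        CompletableFuture.allOf(tasks.toArray(new CompletableFuture[0])).join();
        return done.size();
    }

    public static void main(String[] args) {
        System.out.println(runAndWait(5) + " tasks finished before main exits");
    }
}
```

Tasks started with runAsync use daemon threads from the common pool by default, so without the join() the JVM could exit while some of them are still running.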

More details: Oracle CompletableFuture

Parallel programming

Unlike multi-threading, where each task is a discrete logical unit of a larger task, in parallel programming the tasks are independent and their execution order does not matter. There are two design patterns you can use:

- functional parallelism: the tasks are defined by different functions;

- data parallelism: the tasks are defined by different data sets;
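The difference between the two patterns can be sketched on a small array. The class and method names are hypothetical, and the choice of min/max and sum of squares is only for illustration.

```java
import java.util.Arrays;
import java.util.concurrent.CompletableFuture;

class ParallelismKindsDemo {

    // Functional parallelism: two different functions run at the same time.
    static int[] minAndMax(int[] data) {
        CompletableFuture<Integer> min =
                CompletableFuture.supplyAsync(() -> Arrays.stream(data).min().getAsInt());
        CompletableFuture<Integer> max =
                CompletableFuture.supplyAsync(() -> Arrays.stream(data).max().getAsInt());
        return new int[] {min.join(), max.join()};
    }

    // Data parallelism: the same function is applied to different parts of the data.
    static long sumOfSquares(int[] data) {
        return Arrays.stream(data).parallel().mapToLong(x -> (long) x * x).sum();
    }

    public static void main(String[] args) {
        int[] data = {3, 1, 4, 1, 5, 9, 2, 6};
        System.out.println(Arrays.toString(minAndMax(data))); // [1, 9]
        System.out.println(sumOfSquares(data));               // 173
    }
}
```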

Parallel streams

Java 8 introduced the Stream API, which makes it easy to iterate over collections of data. Stream operations can also be executed on parallel threads. A parallel stream is thread-safe only as long as its operations are stateless and do not modify the data source or other shared state.

A stream in Java is simply a wrapper around a data source, allowing us to perform bulk operations on the data in a convenient way. It doesn't store data or make any changes to the underlying data source. Rather, it adds support for functional-style operations on data pipelines.

    List<Integer> listOfNumbers = Arrays.asList(1, 2, 3, 4);
    listOfNumbers.stream().forEach(number ->
        System.out.println(number + " " + Thread.currentThread().getName())
    );

By default a stream is executed in a single thread. Java streams can be transformed from sequential to parallel. You can achieve this by calling the parallel() method on a sequential stream or by creating a stream using the parallelStream() method:

    List<Integer> listOfNumbers = Arrays.asList(1, 2, 3, 4);
    listOfNumbers.parallelStream().forEach(number ->
        System.out.println(number + " " + Thread.currentThread().getName())
    );

Map-reduce pattern

Bulk operations can be parallelized. To do this, you select specific data from a data set, split the data set into slices, process the slices in parallel, then compute the final result using an aggregate function. You can chain all these operations together in a single statement (functional style) using the dot operator.
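The steps above can be sketched as one chained pipeline. The class name and the particular selection (even numbers) and mapping (squares) are assumptions chosen to keep the example small.

```java
import java.util.Arrays;
import java.util.List;

class MapReduceDemo {

    // Select the even numbers, square them, and add the squares together.
    static int sumOfEvenSquares(List<Integer> numbers) {
        return numbers.parallelStream()
                .filter(n -> n % 2 == 0)   // select specific data
                .map(n -> n * n)           // map: applied to slices in parallel
                .reduce(0, Integer::sum);  // reduce: aggregate the partial results
    }

    public static void main(String[] args) {
        List<Integer> numbers = Arrays.asList(1, 2, 3, 4, 5, 6);
        System.out.println(sumOfEvenSquares(numbers)); // 56
    }
}
```

Addition is associative and 0 is its identity, which is what lets the partial sums from different slices be combined in any order.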

Java Stream

Fork-Join framework

The fork-join framework is in charge of splitting the source data between worker threads and handling callbacks on task completion. It was added to the java.util.concurrent package in Java 7 to handle task management between multiple threads.
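To show the splitting explicitly, here is a hand-written fork-join task that sums an array. This is a sketch of the general technique, not the code that parallel streams use internally. The class name `SumTask` and the threshold of 1,000 elements are arbitrary assumptions.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class SumTask extends RecursiveTask<Long> {
    private static final int THRESHOLD = 1_000; // arbitrary cut-off for this sketch
    private final int[] data;
    private final int from;
    private final int to;

    SumTask(int[] data, int from, int to) {
        this.data = data;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) { // small enough: sum directly
            long sum = 0;
            for (int i = from; i < to; i++) {
                sum += data[i];
            }
            return sum;
        }
        int mid = (from + to) >>> 1;
        SumTask left = new SumTask(data, from, mid);
        SumTask right = new SumTask(data, mid, to);
        left.fork();                          // schedule the left half asynchronously
        return right.compute() + left.join(); // compute the right half here, then wait for the left
    }

    static long sum(int[] data) {
        return ForkJoinPool.commonPool().invoke(new SumTask(data, 0, data.length));
    }

    public static void main(String[] args) {
        int[] data = new int[10_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(sum(data)); // 50005000
    }
}
```

In everyday code a parallel stream does this splitting for you, as in the short reduce example that follows.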

    List<Integer> listOfNumbers = Arrays.asList(1, 2, 3, 4, 5);
    int sum = listOfNumbers.parallelStream().reduce(0, Integer::sum);
    assertThat(sum).isEqualTo(15);

Number of threads

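The number of threads in the common pool is based on the number of processor cores (by default the parallelism is one less than the number of available processors, because the calling thread also takes part in the work). However, the API allows us to specify the number of threads it will use by passing a JVM parameter.

    -Djava.util.concurrent.ForkJoinPool.common.parallelism=4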
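To check what the common pool is actually using on a given machine, a small probe like the following can help. The class name `PoolSizeDemo` is hypothetical, and no expected output is shown because the numbers depend on the hardware and JVM flags.

```java
import java.util.concurrent.ForkJoinPool;

class PoolSizeDemo {

    // Parallelism level of the pool that parallel streams use by default.
    static int commonParallelism() {
        return ForkJoinPool.commonPool().getParallelism();
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("available processors:    " + cores);
        System.out.println("common pool parallelism: " + commonParallelism());
    }
}
```

Running it once without the JVM parameter and once with it set to 4 should show the second value change, since the property is read when the common pool is first initialized.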

Custom threads

You can use a custom thread pool for critical parts of an application that require processing on a particular number of threads. Note that Oracle recommends using the common thread pool, so you should have a very good reason for running parallel streams in a custom thread pool.

    List<Integer> listOfNumbers = Arrays.asList(1, 2, 3, 4);
    ForkJoinPool customThreadPool = new ForkJoinPool(4);
    // get() throws InterruptedException and ExecutionException; handle or declare them.
    int sum = customThreadPool.submit(
        () -> listOfNumbers.parallelStream().reduce(0, Integer::sum)).get();
    customThreadPool.shutdown();
    assertThat(sum).isEqualTo(10);

Read more: Oracle Parallelism

The End

Congratulations. You have finished our course. Now you should take the prep quiz. Your journey to becoming a professional Java programmer has just started. We look forward to working with you on projects.

Ready for: Prep Quiz